Mission-critical systems, such as water purification systems, require secure and reliable data networks to ensure they perform efficiently, effectively and without interruption. Some of Anglian Water’s sites (GB) had automation equipment that was installed over 30 years ago. This was problematic, as it did not provide a suitable infrastructure for the transition to modern IP-based communication networks. For example, the existing programmable logic controllers (PLCs) had to be replaced, but the new controllers were incompatible with the existing data networks, which meant the entire automation system needed to be upgraded at the same time. A key benefit of upgrading the system would be an increase in network redundancy and, consequently, in the reliability of the service for customers. It would also provide Anglian Water with holistic monitoring of the system and access to real-time data.

In the autumn of 2019, Anglian Water wanted to upgrade the data networks for one of their sites and enlisted the help of Westermo. The network connected ten remote pumps back to a main water plant near King’s Lynn using a series of RS-485 cables. One option was to simply replace the existing ageing data communications equipment with similar devices, but that would not satisfy the requirement for network redundancy and modernisation. In addition, the company wanted to use the existing cables to minimise the cost of the project and to implement a 4G network to provide communication redundancy.

Collaboration on this project started when engineers from Anglian Water attended a Westermo Certified Engineer training course to help them gain the knowledge needed to upgrade their existing data communications network. This led to the development of a network diagram to determine the full scope and significance of this specific project, and it was decided that they would get support from Westermo to design and configure the network.

Network design and installation phases

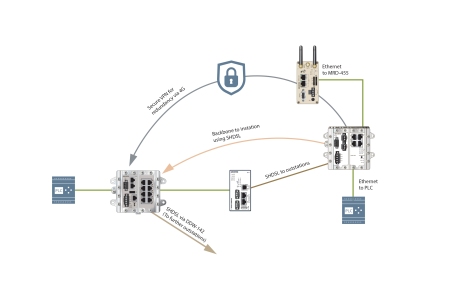

Working with Anglian Water engineers, Westermo designed a data network that utilised the existing multiplexer network and RS-485 cables to provide a backbone for Ethernet communication, and added a new 4G cellular network to provide redundancy. With this design, the network could continue to function using the 4G communications while the primary links were added one at a time, allowing commissioning, testing and firewalls to be implemented on a ‘per site’ basis. Once the single-pair high-speed digital subscriber line (SHDSL) was operating, the Westermo Wolverine line extenders would automatically reconfigure the network to operate via the SHDSL.

The importance of this project was evident throughout the design phase, as it supported a live, mission-critical system. The solution needed to be implemented within a tight schedule and start immediately without any issues. Collaboration was key to properly understanding the network requirements. Most importantly, the remote pumping stations needed to remain fully operational during commissioning.

The network is constructed predominantly of Westermo WeOS devices, which use the non-proprietary open shortest path first (OSPF) routing protocol and support virtual private networks (VPN). OSPF provides a mechanism to select different paths for network traffic and the VPN provides an added layer of security for the network. This complex setup required a clear understanding of the network structure to determine how the data would be routed should there be a failure at any point on the SHDSL.
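The path selection described above can be illustrated with a small sketch. OSPF routers run a shortest-path (Dijkstra) calculation over link costs, so a low-cost SHDSL link is preferred while it is up and a high-cost 4G link takes over when it fails. The topology, node names and cost values below are hypothetical and chosen only for illustration; they are not taken from the Anglian Water configuration.

```python
import heapq


def shortest_path(links, source, target):
    """Dijkstra over an undirected weighted graph.

    links maps (node_a, node_b) -> cost. Returns (cost, path),
    or (None, []) if the target cannot be reached.
    """
    graph = {}
    for (a, b), cost in links.items():
        graph.setdefault(a, []).append((b, cost))
        graph.setdefault(b, []).append((a, cost))

    queue = [(0, source, [source])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == target:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbour, link_cost in graph.get(node, []):
            if neighbour not in visited:
                heapq.heappush(queue, (cost + link_cost, neighbour, path + [neighbour]))
    return None, []


def without_link(links, a, b):
    """Return a copy of links with the a-b link removed (simulated failure)."""
    return {k: v for k, v in links.items() if k not in ((a, b), (b, a))}


# Hypothetical topology: SHDSL links are cheap, 4G links are expensive.
LINKS = {
    ("central", "pump1"): 10,       # SHDSL segment (assumed cost)
    ("pump1", "pump2"): 10,         # SHDSL segment (assumed cost)
    ("central", "cellular"): 1000,  # 4G (assumed cost)
    ("pump1", "cellular"): 1000,    # 4G (assumed cost)
    ("pump2", "cellular"): 1000,    # 4G (assumed cost)
}

normal = shortest_path(LINKS, "pump2", "central")
failed = shortest_path(without_link(LINKS, "pump1", "pump2"), "pump2", "central")
print(normal)  # traffic follows the SHDSL backbone
print(failed)  # traffic falls back to the 4G path
```

Because the 4G costs are far higher than the SHDSL costs, traffic returns to the SHDSL backbone as soon as the failed segment is restored, with no manual intervention.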

To help the customer commission the network, Westermo provided supporting documentation, which was produced using Westermo’s WeConfig software configuration tool. The report function of the software pulled together the whole network to present a clear overview, providing confidence during testing and commissioning stages. WeConfig also allowed a full backup of the whole site in one sweep, and a detailed overview of physical connections.

Each site required Anglian Water to install new external antennas to provide them with suitable 4G coverage. Once the 4G network was available and operating, the twisted pair cables could be updated one at a time. Once the SHDSL links were commissioned, the network could reroute traffic via the SHDSL backbone to Anglian Water’s central site.

Unexpected circumstances during commissioning

The 4G network was included in the plan simply to provide redundancy, but it offered an unexpected benefit. During commissioning of the network, the COVID-19 pandemic struck. This made it very difficult for support personnel to go on-site safely and accelerated the need for remote management to monitor the sites.

Fortunately, the new network was designed and configured to enable remote access using the 4G communications. Originally this was intended to support network management tasks, but it also gave engineers a way to connect remotely to the network from within the Anglian Water system and assist with any technical issues during and after configuration. Westermo technical engineers were able to support the customer via this method, ensuring the network was up and running and that all members of staff involved were safe.

Photo: Jonas Bilberg

Monitoring the system

In addition to designing the network and configuring the network devices, Westermo also configured the ability to monitor the 4G communications and SHDSL port status using Simple Network Management Protocol (SNMP). By monitoring the network, Anglian Water can determine whether the connection is using the primary Ethernet backbone or the 4G cellular network. This helps them understand whether a fault has occurred on one of the SHDSL lines.
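As a rough illustration of how polled port status might be interpreted, the sketch below classifies each site from two `ifOperStatus` values, the standard interface state object in the SNMP IF-MIB (1 means up, 2 means down, and so on). The polling itself is left out, and the site names, the function names and the rule that "SHDSL port up" implies "primary path in use" are assumptions made for this example, not details of the Anglian Water setup.

```python
# ifOperStatus values as defined in the standard IF-MIB (RFC 2863).
IF_OPER_STATUS = {
    1: "up",
    2: "down",
    3: "testing",
    4: "unknown",
    5: "dormant",
    6: "notPresent",
    7: "lowerLayerDown",
}


def active_path(shdsl_status, cellular_status):
    """Infer which path a site is likely using from two ifOperStatus values.

    Assumption: if the SHDSL port is up, routing prefers it; otherwise the
    site is on 4G if the cellular interface is up, and isolated if neither is.
    """
    if shdsl_status == 1:
        return "primary"
    if cellular_status == 1:
        return "backup"
    return "isolated"


def site_report(polled):
    """Map {site: (shdsl_status, cellular_status)} to a readable summary."""
    report = {}
    for site, (shdsl, cellular) in polled.items():
        report[site] = (
            f"{active_path(shdsl, cellular)} "
            f"(SHDSL {IF_OPER_STATUS.get(shdsl, 'invalid')}, "
            f"4G {IF_OPER_STATUS.get(cellular, 'invalid')})"
        )
    return report


# Hypothetical poll results for two sites.
print(site_report({"pump1": (1, 1), "pump2": (2, 1)}))
```

A site reported as "backup" is the case of interest: the 4G link is carrying the traffic, which suggests a fault on that site's SHDSL line.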

Additional support

Once the network was in place and operating, firewalls were added. Due to the pandemic, it was not possible to have support on-site when adding the firewalls, which meant remote support was the only option. Every firewall modification had to be carefully planned to ensure that support engineers were not locked out during testing. The firewalls were configured using commands sent via the 4G communications. Configuration was completed at the central site first to ensure that communication to all of the remote sites was working correctly, before rolling out a full secure network. The remote sites were then added one at a time to reduce risk. Implementing the firewalls was challenging for both Anglian Water and Westermo, with every command needing to be accurate. Ultimately, the firewall configuration was successful and has given the customer confidence that the network is secure.
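The rollout order described above (central site first, then one remote site at a time, checking access after every change) can be sketched as a simple loop. The three callables are hypothetical stand-ins for whatever applies rules, checks management access and reverts a device. In particular, `rollback` is assumed to work even when remote access has been lost, for example through a timed revert on the device; that mechanism is an assumption for this sketch and is not described in the article.

```python
def staged_rollout(sites, apply_rules, is_reachable, rollback):
    """Apply firewall rules one site at a time, stopping at the first failure.

    Returns (completed_sites, failed_site). failed_site is None when every
    site was configured and remained reachable afterwards.
    """
    completed = []
    for site in sites:
        apply_rules(site)
        if not is_reachable(site):
            # Undo the change on this site and leave later sites untouched.
            rollback(site)
            return completed, site
        completed.append(site)
    return completed, None


def simulate(sites, faulty):
    """Dry run using in-memory stand-ins for the three device operations."""
    state = {}

    def apply_rules(site):
        state[site] = "firewalled"

    def is_reachable(site):
        # A site in `faulty` loses management access once rules are applied.
        return site not in faulty

    def rollback(site):
        state[site] = "rolled back"

    completed, failed = staged_rollout(sites, apply_rules, is_reachable, rollback)
    return completed, failed, state


# Central site first, then the remote sites in order (hypothetical names).
print(simulate(["central", "pump1", "pump2", "pump3"], faulty={"pump2"}))
```

Stopping at the first failure limits the impact of a bad rule to a single site, which is the point of adding the remote sites one at a time.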

“We felt fully supported by Westermo throughout design and installation. We are delighted we now have an upgraded, supportable system in place and that this was achieved during extremely challenging times. Collaboration between all parties has made this a success”, explained Charlie Pritchard, Infrastructure Project Manager at Anglian Water.

Products used in application

In total, twelve Wolverine DDW-142 and DDW-225 line extenders were installed to enable the existing cables to be reused to create effective Ethernet networks over long distances to the remote sites. The Wolverines use SHDSL technology, which makes it possible to reuse many types of pre-existing copper cables and can lead to considerable financial savings. All Wolverine devices are powered by Westermo’s WeOS operating system, which enables complex networking functions to be configured easily.

MRD-455

Ten of Westermo’s MRD-455 routers were installed, one at each site, to create the 4G network. As well as forming the 4G network, the cellular routers provide a gateway to the IP network, and a unique method for port forwarding to allow remote support and monitoring. A Westermo RedFox RFI-211-T3G industrial routing switch was installed at the central site, providing the necessary layer 3 functionality required for this type of application. All the Westermo devices were delivered pre-configured to save time and reduce project risk.

Result

Once installed, the network immediately operated correctly, which can be attributed to the careful planning and collaboration between Anglian Water and Westermo. Despite the challenges caused by the COVID-19 pandemic, Westermo was able to develop a stable, secure and ultra-robust network with remote access support as an alternative to on-site support. This has ensured the network has operated smoothly without any interruptions. As a result of this successful network upgrade, the local area can continue to enjoy clean and uninterrupted water supply every day.

Posted by Eoin Ó Riain


Zero-defect production is the goal. But how can it be guaranteed that only flawless products leave the production line? In order to make quality inspection as efficient, simple, reliable and cost-effective as possible, the German company sentin GmbH develops solutions that use deep learning and industrial cameras from IDS to enable fast and robust error detection. A sentin VISION system uses AI-based recognition software and can be trained using a few sample images. Together with a GigE Vision CMOS industrial camera from


The system is capable of segmenting objects, patterns and even defects. Even surfaces that are difficult to detect cannot stop the system. Classical applications can be found, for example, in the automotive industry (defect detection on metallic surfaces) or in the ceramics industry (defect detection by making dents visible on reflecting and mirroring surfaces), but also in the food industry (object and pattern recognition).

A thermal imaging camera can be an effective screening device for detecting individuals with an elevated skin temperature. This type of monitoring can provide useful information when used as a screening tool in high-traffic areas to help identify people with an elevated temperature compared to the general population. That individual can then be further screened using other body temperature measuring tools.


The Environment Agency uses two main types of continuous water quality monitors; a fixed, cabinet or kiosk based system (right), and a portable version which is housed in a rugged case (below). Evidence from these systems is utilised by environment planners, ecologists, fisheries and environment management teams across the agency. These continuous water quality monitoring systems have been developed and refined over the last 20 years, so that they can be quickly and easily deployed at almost any national location; delivering data via telemetry within minutes of installation. This high-intensity monitoring capability substantially improves the temporal and spatial quality of data. The rapid deployment of these monitors now enables the agency to respond more quickly to pollution events.


As part of this NOC ongoing program, the tide gauges’ main datalogger and transmitter have been upgraded to incorporate OTT’s new Sutron SatLink3. The first site to receive this upgrade was the Vernadsky station located in Antarctica, which is now operated by Ukrainian scientists and is soon to be followed by the tide gauge at King Edward point, on the South Georgia islands.


One in three British adults see a key role for the use of robotics in tackling the COVID-19 crisis and future pandemics, research released today reveals. The public poll*, commissioned by the
